This post is about using an algorithm designed to mimic natural selection to find the formula for an unknown polynomial.

I started with a random polynomial of degree 5 with integer coefficients between -10 and 10.

The formula I picked to test the program with was:

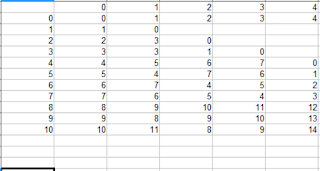

All my program ever knew about the above equation, which I'll call the Target equation, was its value at 20 test points between -5 and 5.

The algorithm starts by picking 100 completely random equations with integer coefficients between -10 and 10.
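A minimal sketch of how this starting population could be generated in Python. The post doesn't show its code, so the names `random_equation` and `random_population`, the coefficient order (constant term first), and the fixed seed are all assumptions made for illustration.

```python
import random

DEGREE = 5                      # degree of the polynomials, as in the post
COEFF_MIN, COEFF_MAX = -10, 10  # integer coefficient range, as in the post

def random_equation(rng):
    """Return DEGREE + 1 random integer coefficients, constant term first.

    Assumption: the leading coefficient may come out as 0, so some
    "degree 5" equations are really of lower degree.
    """
    return [rng.randint(COEFF_MIN, COEFF_MAX) for _ in range(DEGREE + 1)]

def random_population(size, rng):
    """Return a list of `size` independent random equations."""
    return [random_equation(rng) for _ in range(size)]

rng = random.Random(0)          # seeded only so the sketch is repeatable
population = random_population(100, rng)
```

Each equation is just a list of six integers, which keeps the later averaging and mutation steps simple.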

For example, one my program picks might be:

To compare this random equation with the Target equation, I evaluate both at a test point, subtract one value from the other, and square the difference. Say the Target evaluates to yt at x = -3.5 and the random one evaluates to yr at x = -3.5; the squared difference is (yt - yr)^2. Adding up those squared differences over all 20 evenly spaced points between -5 and 5 gives the total "fitness".
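The fitness calculation can be sketched roughly like this. The function names, the constant-term-first coefficient order, the choice to include both endpoints -5 and 5 in the 20 points, and the example `target` coefficients are assumptions, not details taken from the original program.

```python
def evaluate(coeffs, x):
    """Evaluate a polynomial (constant term first) at x using Horner's rule."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def test_points(n=20, lo=-5.0, hi=5.0):
    """Return n evenly spaced points from lo to hi (endpoints included: an assumption)."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]

def fitness(candidate, target_values, xs):
    """Sum of squared differences between target and candidate; 0 is a perfect match."""
    return sum((yt - evaluate(candidate, x)) ** 2
               for x, yt in zip(xs, target_values))

# Hypothetical target, used only to exercise the functions.
target = [3, -1, 4, 0, -2, 1]
xs = test_points()
target_values = [evaluate(target, x) for x in xs]

print(fitness(target, target_values, xs))              # a perfect match scores 0
print(fitness([0, 0, 0, 0, 0, 0], target_values, xs))  # anything else scores above 0
```

Note that the fitness function only ever sees `target_values`, never the target's coefficients, which matches the idea that the program knows the Target equation only through its values at the test points.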

Then, out of those 100, it picks the 10 with the best fitness. By best I mean those with the fitness closest to 0, that is, the best matches to the Target equation.

Then it takes those 10 equations and produces offspring equations by averaging every possible pair together. That's just adding the two equations together and then dividing by 2. But I only want equations with integer coefficients, so I round the coefficients to integers. For example, the offspring of x^2 + 2 and 3*x^2 + 3 would be (x^2 + 2 + 3*x^2 + 3)/2 = 2*x^2 + 2.5, which rounds to 2*x^2 + 2. So for the 10 equations I had, I now have those 10 and 45 more that come from every possible pair out of those 10. To this I add 55 random "new blood" equations to keep the population at 100.
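The pairwise averaging might look like the sketch below. The names `breed` and `all_offspring` are made up for illustration, and the rounding rule is an assumption: Python's built-in `round` rounds halves to the nearest even integer, which happens to agree with the 2.5 to 2 example above, but the post doesn't say which rule the original program used.

```python
from itertools import combinations

def breed(parent_a, parent_b):
    """Average two equations coefficient by coefficient, rounding to integers.

    Coefficients are stored constant term first. round() uses
    round-half-to-even, an assumption about the original rounding rule.
    """
    return [round((a + b) / 2) for a, b in zip(parent_a, parent_b)]

def all_offspring(parents):
    """Return one child for every unordered pair of parents."""
    return [breed(a, b) for a, b in combinations(parents, 2)]

# x^2 + 2 and 3*x^2 + 3, written constant term first.
child = breed([2, 0, 1], [3, 0, 3])
print(child)  # [2, 0, 2], i.e. 2*x^2 + 2
```

With 10 parents, `combinations(parents, 2)` yields 10 * 9 / 2 = 45 pairs, which is where the 45 offspring come from.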

There is also a mutation rate, which I set to once per generation: the program makes a "mistake" and one of the coefficients gets a random number instead of the value it would have had from averaging the formulas. More on this below.
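One way to sketch that single per-generation mutation is shown here. The function name `mutate_one` and the choice to pick the affected equation and coefficient uniformly at random are assumptions; the post only says that one coefficient gets a random value once per generation.

```python
import random

def mutate_one(offspring, rng, lo=-10, hi=10):
    """Overwrite one coefficient of one randomly chosen equation, in place.

    Returns the (equation index, coefficient index) that was changed.
    Uniform selection is an assumption, not something the post specifies.
    """
    eq_index = rng.randrange(len(offspring))
    coeff_index = rng.randrange(len(offspring[eq_index]))
    offspring[eq_index][coeff_index] = rng.randint(lo, hi)
    return eq_index, coeff_index

rng = random.Random(42)              # seeded only for repeatability
offspring = [[1, 2, 3], [4, 5, 6]]   # toy offspring list
changed = mutate_one(offspring, rng)
```

Because the new value is drawn from the full -10 to 10 range, the mutation can occasionally reproduce the old value and leave the equation unchanged.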

So now I have 100 equations. I take the best 10 of those the way I did before, breed 45 new ones, add 55 random ones, take the best 10 of those, and on and on...
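Putting the steps together, the whole loop could be sketched as follows. This is not the original program: all names are hypothetical, the counts 100, 10, and 55 are the ones quoted in the post, and the sketch assumes the 10 parents are carried over unchanged alongside their offspring and the newcomers, that the mutation hits one offspring, and that the loop stops early on an exact match.

```python
import random
from itertools import combinations

DEGREE, LO, HI = 5, -10, 10
POP_SIZE, ELITE, NEW_BLOOD = 100, 10, 55   # counts quoted in the post

def evaluate(coeffs, x):
    """Horner's rule; coefficients are stored constant term first."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result

def random_equation(rng):
    return [rng.randint(LO, HI) for _ in range(DEGREE + 1)]

def evolve(target_values, xs, generations, rng):
    """Run the loop for at least one generation; return (best equation, fitness history)."""
    def fitness(eq):
        return sum((yt - evaluate(eq, x)) ** 2
                   for x, yt in zip(xs, target_values))

    population = [random_equation(rng) for _ in range(POP_SIZE)]
    history = []
    for _ in range(generations):
        best = sorted(population, key=fitness)[:ELITE]
        history.append(fitness(best[0]))
        if history[-1] == 0:          # exact match found
            break
        # Average every pair of the best equations, rounding to integers.
        offspring = [[round((a + b) / 2) for a, b in zip(p, q)]
                     for p, q in combinations(best, 2)]
        # One mutation per generation, applied to a random offspring.
        child = rng.choice(offspring)
        child[rng.randrange(len(child))] = rng.randint(LO, HI)
        newcomers = [random_equation(rng) for _ in range(NEW_BLOOD)]
        # Assumption: parents survive unchanged next to offspring and newcomers.
        population = best + offspring + newcomers
    return best[0], history

xs = [-5 + i * 10 / 19 for i in range(20)]
target = [3, -1, 4, 0, -2, 1]         # hypothetical target, constant term first
target_values = [evaluate(target, x) for x in xs]
best, history = evolve(target_values, xs, 30, random.Random(1))
```

Because the parents are kept as they are, the best fitness in `history` can never get worse from one generation to the next; whether the original program behaved this way is not stated.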

So the Target equation was:

100 generations later, the member of the population with the best fitness was:

It had already arrived at the biggest 2 terms correctly. After 1000 generations, one member of the population matched the Target exactly.

On a modern computer, those 1,000 generations took only about 15 seconds. For comparison, I tried simply generating random equations until one happened to match; after 500 seconds it still hadn't reached the correct answer. That's a pretty good speedup: if my solution space were a lot bigger, that could mean the difference between 15 hours and 500 hours, or about 20 days.
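A rough back-of-the-envelope count shows why blind guessing struggles. It assumes each of the 6 coefficients can independently take any of the 21 integers from -10 to 10 (including a zero leading coefficient) and that each generation scores about 100 equations; both are simplifications.

```python
choices_per_coefficient = 21           # integers from -10 to 10 inclusive
coefficients = 6                       # a degree-5 polynomial has 6 coefficients
search_space = choices_per_coefficient ** coefficients

generations = 1000
evaluated_per_generation = 100         # approximate population size from the post
ga_evaluations = generations * evaluated_per_generation

print(search_space)                    # 85766121 possible equations
print(ga_evaluations)                  # 100000 equations scored by the algorithm
```

Under these assumptions a uniformly random guess hits the target with probability 1 in 85,766,121, while the selection loop found it after scoring on the order of 100,000 equations.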

Anyway, it was interesting designing the algorithm because I had to add the mutation rate and the "new blood" step to keep it from getting stuck at a certain best fitness and never reaching the right answer. I imagine that probably has an analogy in actual genetic selection.